
Yesterday, we witnessed the start of a brand new era of AI. OpenAI introduced Sora, its new text-to-video AI model that turns simple prompts into strikingly realistic video.

According to OpenAI, Sora is built on a model with a deep understanding of language, which allows it to create moving images that adhere to the physics of reality. “The model understands not only what the user has asked for in the prompt, but also how those things exist in the physical world,” OpenAI wrote in a blog post.

Given a prompt that outlines the characters, location, emotion, and even filming style you’re looking for, the model can generate a video that’s up to a minute long and features multiple characters and shots.

“Sora is able to generate complex scenes with multiple characters, specific types of motion, and accurate details of the subject and background,” says OpenAI.

The company is not exaggerating.

OpenAI claims that, unlike earlier commercial generative-AI engines, which merely imitated patterns, Sora understands reality. That an AI model can deduce how “things exist in the real world” is a striking and monumental moment for AI generation. It’s what allows Sora to produce near-perfect videos. But it’s also bringing us one step closer to the end of reality itself: an era of post-truth where absolutely nothing we see on our phones and computers will be believable.

Putting Sora to the test

Yesterday, OpenAI’s CEO Sam Altman had reasons to be ecstatic, boasting on X about Sora’s extraordinary abilities and inviting people to suggest prompts for his new favorite AI beast before publishing the results a little later. Like these two golden retrievers podcasting on a mountaintop:

https://t.co/uCuhUPv51N pic.twitter.com/nej4TIwgaP

— Sam Altman (@sama) February 15, 2024

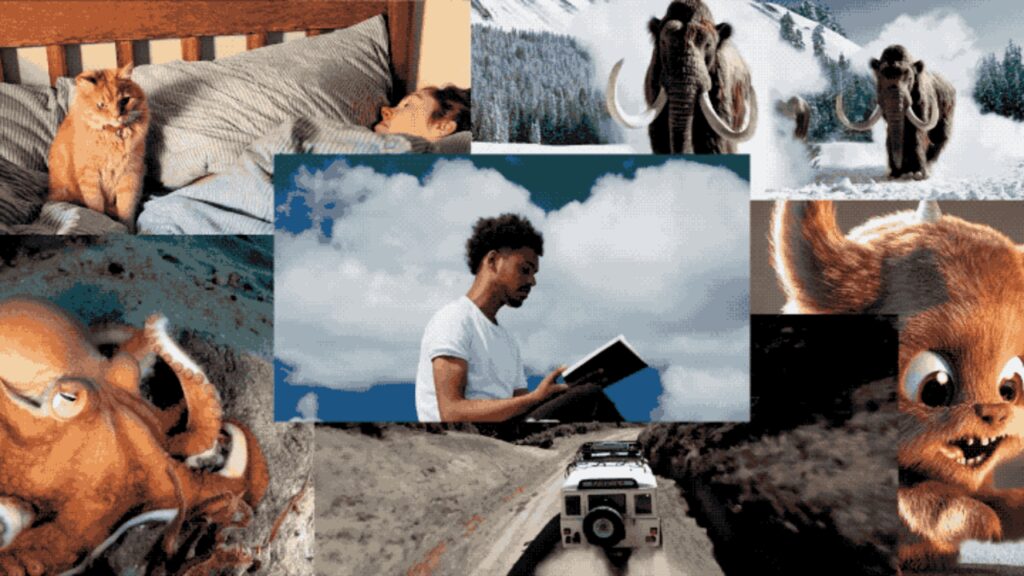

It’s impressive. Even though the resolution feels low, the image looks stable and feels realistic enough. The curated examples on the OpenAI page (as shown in the compilation video below this paragraph) are much more impressive. The definition is simply awesome, surpassing anything produced by the previous king and queen of the generative AI video world, Runway and Stability.

Take the example of a woman walking through a city at the 7:19 mark in OpenAI’s video. Her demeanor, the sunglasses, the people in the background, the neon signs, the water reflections . . . your brain buys it completely.

It’s only when you pay close attention, or when you see the non-curated stuff that Sora doesn’t quite get right, as in the video below, that you can appreciate that we aren’t there quite yet. Sora’s seams are still visible.

even the sora errors are mesmerizing pic.twitter.com/OvPSbaa0L9

— Charlie Holtz (@charliebholtz) February 15, 2024

Sora brings us closer to breaking free of the current generative-AI aesthetic that is already so tired, but it doesn’t quite get us near the Goldilocks zone in which there is no AI aesthetic at all. While it’s not a perfect generative model, it’s undeniably a great leap. What seems clear to me is that we’re about to take the final step into the abyss, where visual reality is a blurry concept, at best. Sora has brought us to this jump point. In a few months, you can expect other synthetic-reality engines to up the ante until one outputs images and video completely indistinguishable from the “real” reality that we can see with our own eyes. It’s inevitable.
