
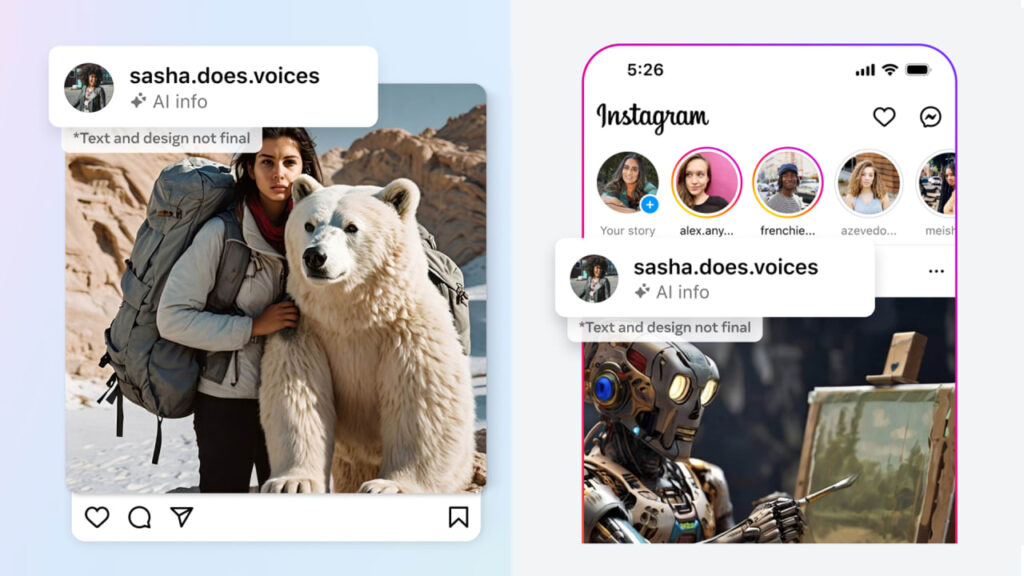

Meta announced on Tuesday that it's taking steps to label AI-generated content, including misinformation and deepfakes, on its Facebook, Instagram, and Threads social platforms. But its mitigation strategy has some major holes, and it's arriving long after the threat of deepfakes has become real.

Meta said that "in the coming months" (when we'll be in the thick of the 2024 presidential election), it will be able to detect AI-generated content on its platforms created by tools from the likes of Adobe and Microsoft. It will rely on the toolmakers to inject encrypted metadata into AI-generated content, according to the specifications of an industry standards body. Meta points out that it has always added "visible markers, invisible watermarks, and metadata" to identify, and label, images generated by its own AI tools.

But these labeling tools are for the good guys. Bad actors who spread AI-generated mis/disinformation use lesser-known, open-source tools to create content that's hard to trace back to the tool or the creator. Or they may choose tools that make it easy to disable the addition of metadata or watermarks.

There's little evidence to suggest that Meta has the technology to detect and label that kind of content at scale. The company says it's "working hard" to develop classifier AI models that can detect AI-generated content lacking watermarks or metadata. It also says it isn't yet able to detect AI-generated videos or audio recordings. Instead, Meta says it's relying on users to label "photorealistic video or realistic-sounding audio that was digitally created or altered" when they post it, and says it may "apply penalties" to those who don't.

In a blog post, Meta policy chief Nick Clegg portrays the problem of AI-generated mis/disinformation as an industry problem, a society-wide problem, and a problem of the future. "As it becomes more common in the years ahead, there will be debates across society about what should and shouldn't be done to identify both synthetic and non-synthetic content." But Meta controls, by far, the biggest distribution network for such content right now, and the need to detect and label deepfakes is now, not a few months from now. Just ask Joe Biden or Taylor Swift. It's too late to be talking about future plans and approaches when another high-stakes election cycle is already upon us.

"Meta has been a pioneer in AI development for more than a decade," Clegg says. "We know that progress and responsibility can and must go hand in hand."

Meta has been developing its own generative AI tools for years now. Can the company really say that it has devoted equal time, resources, and brainpower to mitigating the disinformation risk of the technology?

For more than a decade, going back to its Facebook days, the company has played a profound role in blurring the lines between truth and misinformation, and now it appears to be slow-walking its response to the next major threat to truth, trust, and authenticity.
