
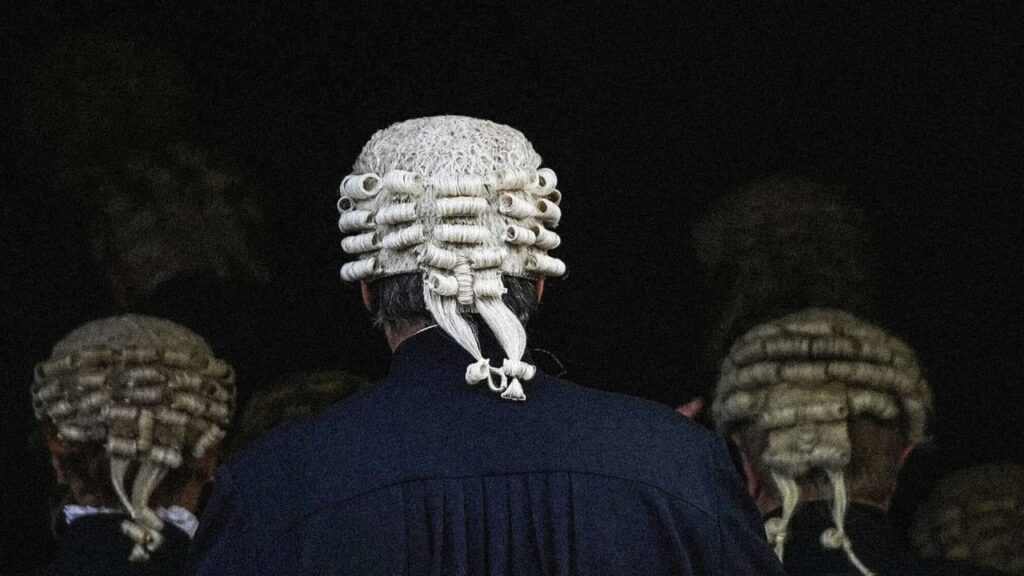

England’s 1,000-year-old legal system, still steeped in traditions that include wearing wigs and robes, has taken a cautious step into the future by giving judges permission to use artificial intelligence to help produce rulings.

The Courts and Tribunals Judiciary last month said AI could help write opinions but stressed it shouldn’t be used for research or legal analyses because the technology can fabricate information and provide misleading, inaccurate and biased information.

“Judges do not need to shun the careful use of AI,” said Master of the Rolls Geoffrey Vos, the second-highest ranking judge in England and Wales. “But they must ensure that they protect confidence and take full personal responsibility for everything they produce.”

At a time when scholars and legal experts are pondering a future when AI could replace lawyers, help select jurors or even decide cases, the approach spelled out Dec. 11 by the judiciary is restrained. But for a profession slow to embrace technological change, it’s a proactive step as government and industry, and society in general, react to a rapidly advancing technology alternately portrayed as a panacea and a menace.

“There’s a vigorous public debate right now about whether and how to regulate artificial intelligence,” said Ryan Abbott, a law professor at the University of Surrey and author of The Reasonable Robot: Artificial Intelligence and the Law.

“AI and the judiciary is something people are uniquely concerned about, and it’s somewhere where we are particularly cautious about keeping humans in the loop,” he said. “So I do think AI may be slower disrupting judicial activity than it is in other areas, and we’ll proceed more cautiously there.”

Abbott and other legal experts applauded the judiciary for addressing the latest iterations of AI and said the guidance would be widely viewed by courts and jurists around the world who are eager to use AI or anxious about what it might bring.

In taking what was described as an initial step, England and Wales moved toward the forefront of courts addressing AI, though it’s not the first such guidance.

Five years ago, the European Commission for the Efficiency of Justice of the Council of Europe issued an ethical charter on the use of AI in court systems. While that document is not up to date with the latest technology, it did address core principles such as accountability and risk mitigation that judges should abide by, said Giulia Gentile, a lecturer at Essex Law School who studies the use of AI in legal and justice systems.

Although U.S. Supreme Court Chief Justice John Roberts addressed the pros and cons of artificial intelligence in his annual report, the federal court system in America has not yet established guidance on AI, and state and county courts are too fragmented for a universal approach. But individual courts and judges at the federal and local levels have set their own rules, said Cary Coglianese, a law professor at the University of Pennsylvania.

“It is certainly one of the first, if not the first, published set of AI-related guidelines in the English language that applies broadly and is directed to judges and their staffs,” Coglianese said of the guidance for England and Wales. “I believe that many, many judges have internally cautioned their staffs about how existing policies of confidentiality and use of the internet apply to the public-facing portals that offer ChatGPT and other such services.”

The guidance shows the courts’ acceptance of the technology, but not a full embrace, Gentile said. She was critical of a section that said judges don’t have to disclose their use of the technology and questioned why there was no accountability mechanism.

“I think that this is certainly a useful document, but it will be very interesting to see how this could be enforced,” Gentile said. “There is no specific indication of how this document would work in practice. Who will oversee compliance with this document? What are the sanctions? Or maybe there are no sanctions. If there are no sanctions, then what can we do about this?”

In its effort to maintain the court’s integrity while moving forward, the guidance is rife with warnings about the limitations of the technology and possible problems if a user is unaware of how it works.

At the top of the list is an admonition about chatbots, such as ChatGPT, the conversational tool that exploded into public view last year and has generated the most buzz over the technology because of its ability to swiftly compose everything from term papers to songs to marketing materials.

The pitfalls of the technology in court are already infamous after two New York lawyers relied on ChatGPT to write a legal brief that quoted fictional cases. The two were fined by an angry judge who called the work they had signed off on “legal gibberish.”

Because chatbots have the ability to remember questions they are asked and retain other information they are provided, judges in England and Wales were told not to disclose anything private or confidential.

“Do not enter any information into a public AI chatbot that is not already in the public domain,” the guidance said. “Any information that you input into a public AI chatbot should be seen as being published to all the world.”

Other warnings include being aware that much of the legal material that AI systems have been trained on comes from the internet and is often based largely on U.S. law.

But jurists who have large caseloads and routinely write decisions that are dozens, even hundreds, of pages long can use AI as a secondary tool, particularly when writing background material or summarizing information they already know, the courts said.

In addition to using the technology for emails or presentations, judges were told they could use it to quickly locate material they are familiar with but don’t have within reach. But it shouldn’t be used for finding new information that can’t be independently verified, and it is not yet capable of providing convincing analysis or reasoning, the courts said.

Appeals Court Justice Colin Birss recently praised how ChatGPT helped him write a paragraph in a ruling in an area of law he knew well.

“I asked ChatGPT, can you give me a summary of this area of law, and it gave me a paragraph,” he told The Law Society. “I know what the answer is because I was about to write a paragraph that said that, but it did it for me, and I put it in my judgment. It’s there and it’s jolly useful.”

—By Brian Melley, Associated Press
