
Google is bringing generative AI out of the lab for a couple of new mobile search features.

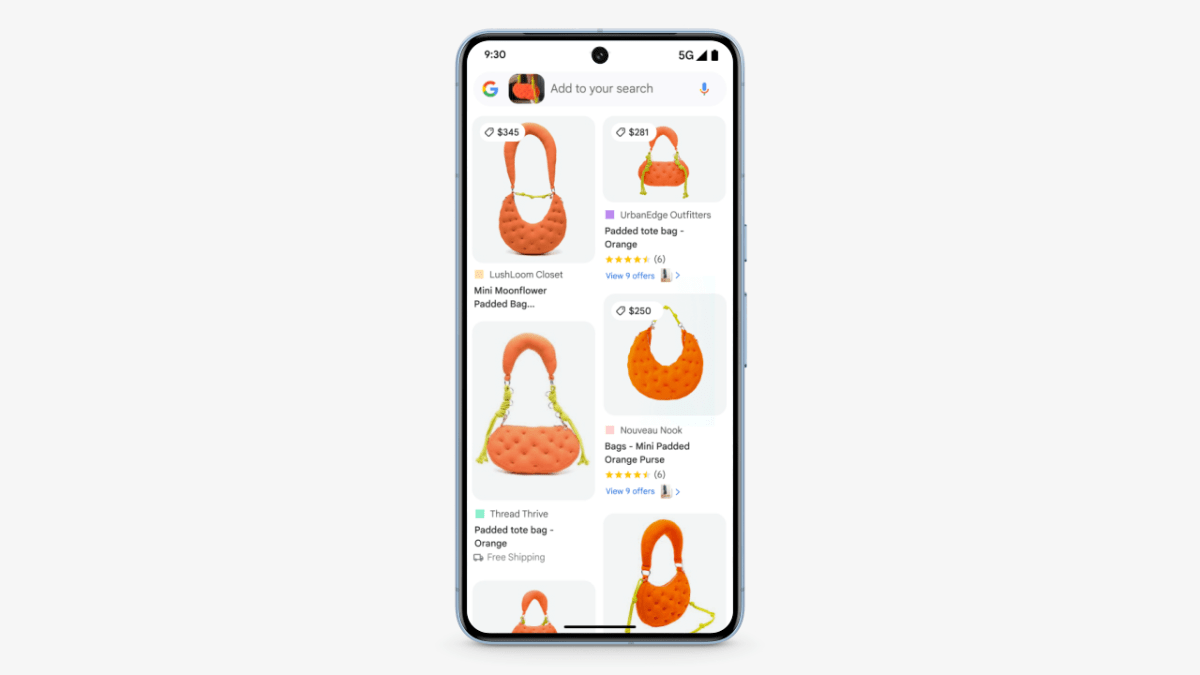

Starting this week, the “Multisearch” feature in Google Lens will let users point their cameras at an object and ask questions about it. AI will then provide an answer along with links to more resources, even for users who haven’t opted into the Search Generative Experience in Google Search Labs.

On January 31, Google is also launching a “Circle to Search” feature for premium Android phones, starting with the Pixel 8 line and Samsung’s just-announced Galaxy S24 range. It will let users look up images or text by highlighting them on the screen, with some queries leading to AI overviews as well.

Multisearch gets more useful

Google launched Multisearch in April 2022, and at the time pitched it primarily as a shopping tool. The idea was that if you saw a T-shirt pattern that you liked, you could look it up with Google Lens and use text to search for variations, such as “skirt” or “purple.”

With AI summaries, Multisearch will provide more details about the object itself, which in turn should make it useful for more than just shopping. Liz Reid, Google’s VP and GM of Search, gives the example of digging out an old board game without a box or instructions, then using Multisearch to identify the game and look up the rules.

“Adding generative AI, I think we’re opening [Multisearch] up to additional verticals and more types of questions that people want to ask,” she says.

Multisearch is also an example of Google figuring out where to make generative AI search results broadly available. AI responses are still slower to load than traditional links or featured snippets, which is one reason Google hasn’t rushed to make them a core search feature. That latency is less of an issue with Multisearch, which is driven by visuals and isn’t really replacing a traditional text search.

“In the case of Multisearch, these are queries that people otherwise can’t do very well at all,” Reid says.

As before, Google Lens can be found by tapping the camera icon in the Google app or on Google’s home screen widgets for iOS and Android.

Instant Android searches

Generative AI will also play a role in Google’s Circle to Search feature for Android.

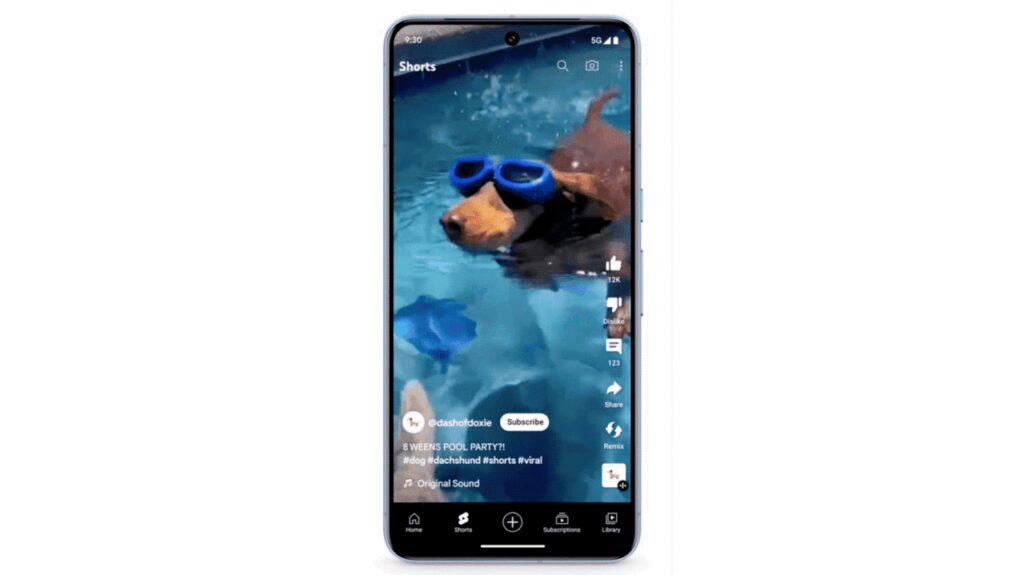

When it launches on January 31, users will be able to long-press the navigation bar or home button, then highlight the text or image they want to search. The results will appear in a pop-up menu, which users can dismiss to return to their previous app.

[Photo: Google]

Reid says the feature should work by default in most apps, including the camera, video apps such as YouTube, and messaging services. She gave the example of seeing a friend mention a restaurant in a chat thread, then using Circle to Search to look up more details.

“Very quickly I can go and say, teach me about the thing, and maintain my context,” Reid says.


Some of these searches will invoke generative AI as well. Circling an image, for instance, will bring up the same Multisearch menu as Google Lens, allowing users to ask about the item and get AI-provided responses. AI answers will also appear for certain text queries, though users will still have to opt into Search Generative Experience to get more of them.

“You get a lot of great use cases, where the combination of Circle to Search plus generative AI feels really natural,” Reid says.

Beyond the Pixel 8 and Galaxy S24 lines, Google won’t say whether it’ll bring the feature to other phones or what the system requirements will be. As for the iPhone, Reid says Google would like to expand the feature beyond Android, but wouldn’t say when or how that might happen.

Don’t call it Gemini

Last month, Google announced a new AI model called Gemini that’s meant to better compete with OpenAI’s GPT-4. The announcement included a video demo that showed Gemini recognizing and discussing hand-drawn images on the fly (though the voice interaction and real-time nature of the demo turned out to be staged).

While Google’s new Multisearch capabilities are somewhat reminiscent of what the company showed off with Gemini, the company is quick to point out that it’s not using Gemini in the product right now. Per Google’s blog post in December, the company is experimenting with Gemini in Search and plans to integrate it in the coming months.

In the meantime, Reid says Search Generative Experience is a testing ground for new ideas.

“As we learn from SGE what’s really helpful, we’ll bring the most helpful use cases of generative AI to Search for everyone, like we’re doing with Multisearch today,” she says.

Correction: Google launched Multisearch in 2022, not 2023 as stated previously.
