When Satya Nitta worked at IBM, he and a team of colleagues took on a bold assignment: Use the latest in artificial intelligence to build a new kind of personal digital tutor.

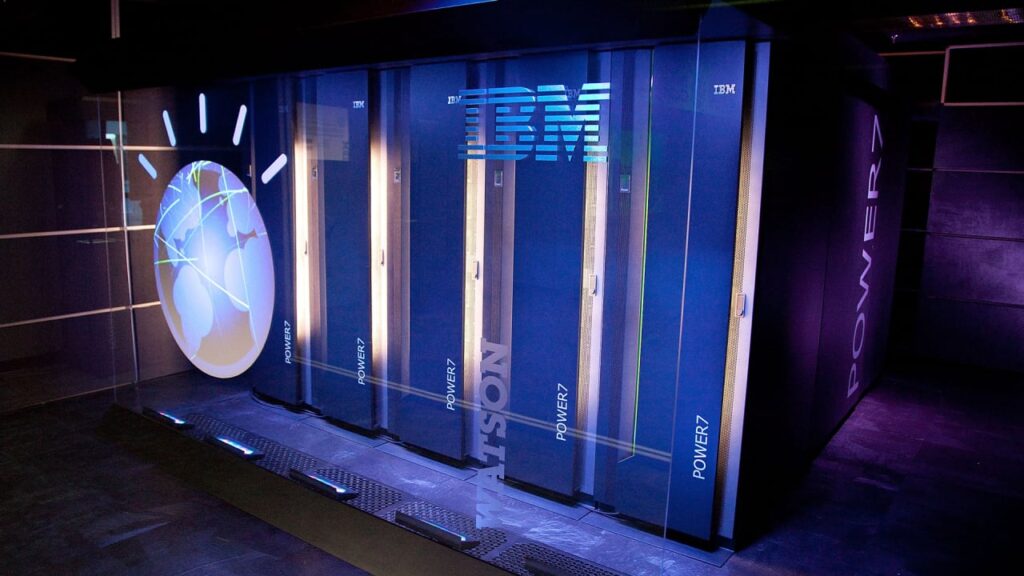

This was before ChatGPT existed, and fewer people were talking about the wonders of AI. But Nitta was working with what was perhaps the highest-profile AI system at the time, IBM’s Watson. That AI tool had pulled off some big wins, including beating humans on Jeopardy in 2011.

Nitta says he was optimistic that Watson could power a generalized tutor, but he knew the task would be extremely difficult. “I remember telling IBM top brass that this is going to be a 25-year journey,” he recently told EdSurge.

He says his team spent about five years trying, and along the way they helped build some small-scale attempts into learning products, such as a pilot chatbot assistant that was part of a Pearson online psychology courseware system in 2018.

Why AI won’t be a general personal tutor for decades (if ever)

But in the end, Nitta decided that even though the generative AI technology driving excitement these days brings new capabilities that will change education and other fields, the tech just isn’t up to delivering on becoming a generalized personal tutor, and won’t be for decades at least, if ever.

“We’ll have flying cars before we will have AI tutors,” he says. “It’s a deeply human process that AI is hopelessly incapable of meeting in a meaningful way. It’s like being a therapist or like being a nurse.”

Instead, he cofounded a new AI company, called Merlyn Mind, that is building other types of AI-powered tools for educators.

“The biggest positive transformation that education has ever seen”

Meanwhile, plenty of companies and education leaders these days are hard at work chasing that dream of building AI tutors. Even a recent White House executive order seeks to help the cause.

Earlier this month, Sal Khan, leader of the nonprofit Khan Academy, told the New York Times: “We’re at the cusp of using A.I. for probably the biggest positive transformation that education has ever seen. And the way we’re going to do that is by giving every student on the planet an artificially intelligent but amazing personal tutor.”

Khan Academy has been one of the first organizations to use ChatGPT to try to develop such a tutor, which it calls Khanmigo and which is currently in a pilot phase in a series of schools.

Khan’s system does come with an off-putting warning, though, noting that it “makes mistakes sometimes.” The warning is necessary because all of the latest AI chatbots suffer from what are known as “hallucinations,” the word used to describe situations when the chatbot simply fabricates details when it doesn’t know the answer to a question asked by a user.

AI experts are busy trying to offset the hallucination problem, and one of the most promising approaches so far is to bring in a separate AI chatbot to check the results of a system like ChatGPT to see if it has likely made up details. That’s what researchers at Georgia Tech have been trying, for instance, hoping that their system can get to the point where any false information is scrubbed from an answer before it is shown to a student. But it’s not yet clear that the approach can reach a level of accuracy that educators will accept.

At this critical point in the development of new AI tools, though, it’s useful to ask whether a chatbot tutor is the right goal for developers to head toward. Or is there a better metaphor than “tutor” for what generative AI can do to help students and teachers?

An ‘Always-On Helper’ vs. “a robot that can read your mind”

Michael Feldstein spends a lot of time experimenting with chatbots these days. He’s a longtime edtech consultant and blogger, and in the past he wasn’t shy about calling out what he saw as excessive hype by companies selling edtech tools.

In 2015, he famously criticized promises about what was then the latest in AI for education: a tool from a company called Knewton. The CEO of Knewton, Jose Ferreira, said his product would be “like a robot tutor in the sky that can semi-read your mind and figure out what your strengths and weaknesses are, down to the percentile.” This led Feldstein to respond that the CEO was “selling snake oil” because, Feldstein argued, the tool was nowhere near living up to that promise. (The assets of Knewton were quietly sold off a few years later.)

So what does Feldstein think of the latest promises by AI experts that effective tutors could be on the near horizon?

“ChatGPT is definitely not snake oil, far from it,” he tells EdSurge. “It is also not a robot tutor in the sky that can semi-read your mind. It has new capabilities, and we need to think about what kinds of tutoring functions today’s tech can deliver that would be useful to students.”

He does think tutoring is a useful way to view what ChatGPT and other new chatbots can do, though. And he says that comes from personal experience.

Feldstein has a relative who is battling a brain hemorrhage, and so Feldstein has been turning to ChatGPT to give him personal lessons in understanding the medical condition and his loved one’s prognosis. As Feldstein gets updates from friends and family on Facebook, he says, he asks questions in an ongoing thread in ChatGPT to try to better understand what’s happening.

“When I ask it in the right way, it can give me the right amount of detail about, ‘What do we know today about her chances of being OK again?’” Feldstein says. “It’s not the same as talking to a doctor, but it has tutored me in meaningful ways about a serious subject and helped me become more educated on my relative’s condition.”

While Feldstein says he would call that a tutor, he argues that it’s still important that companies not oversell what their AI tools can do. “We’ve done a disservice to say they’re these all-knowing boxes, or they will be in a few months,” he says. “They’re tools. They’re strange tools. They misbehave in strange ways, as do people.”

He points out that even human tutors can make mistakes, but most students have a sense of what they’re getting into when they make an appointment with a human tutor.

“When you go into a tutoring center in your college, they don’t know everything. You don’t know how trained they are. There’s a chance they may tell you something that’s wrong. But you go in and get the help that you can.”

Whatever you call these new AI tools, he says, it will be useful to “have an always-on helper that you can ask questions to,” even if their results are just a starting point for more learning.

‘Boring’ but important support tasks

What are new ways that generative AI tools can be used in education, if tutoring ends up not being the right fit?

To Nitta, the stronger role is to serve as an assistant to experts rather than a replacement for an expert tutor. In other words, instead of replacing, say, a therapist, he imagines that chatbots can help a human therapist summarize and organize notes from a session with a patient.

“That’s a very helpful tool rather than an AI pretending to be a therapist,” he says. Even though that may be seen as “boring” by some, he argues that the technology’s superpower is to “automate things that humans don’t like to do.”

In the educational context, his company is building AI tools designed to help teachers, or to help human tutors, do their jobs better. To that end, Merlyn Mind has taken the unusual step of building its own so-called large language model, designed for education, from scratch.

Even then, he argues that the best results come when the model is trained to support specific education domains using vetted data sets, rather than relying on ChatGPT and other mainstream tools that draw from vast amounts of information from the internet.

“What does a human tutor do well? They know the student, and they provide human motivation,” he adds. “We’re all about the AI augmenting the tutor.”

This article was syndicated from EdSurge. EdSurge is a nonprofit newsroom that covers education through original journalism and research.

Jeffrey R. Young is an editor and reporter at EdSurge and host of the weekly EdSurge Podcast.
